Discord In App Reporting

A flexible reporting flow facilitating a safer Discord.

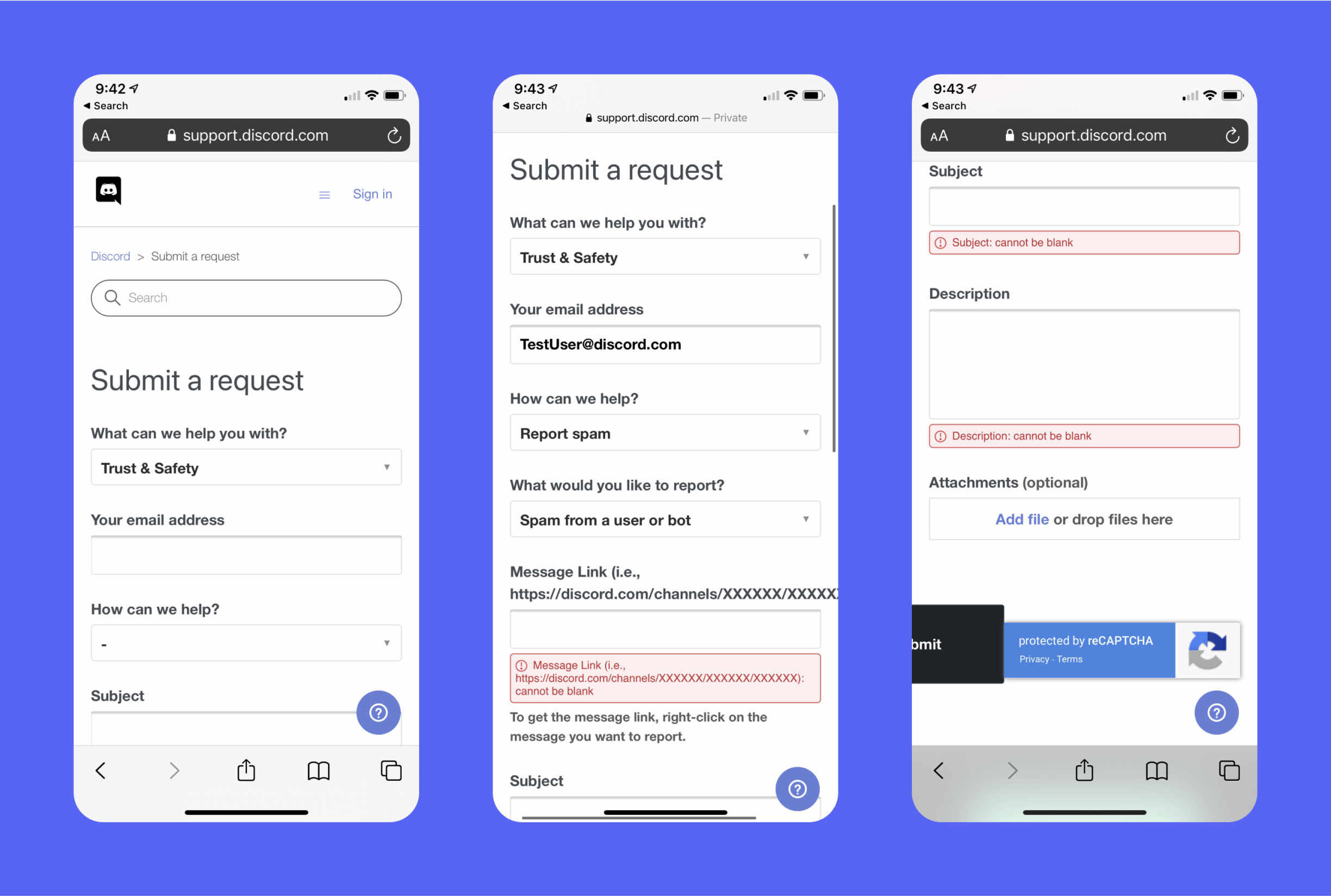

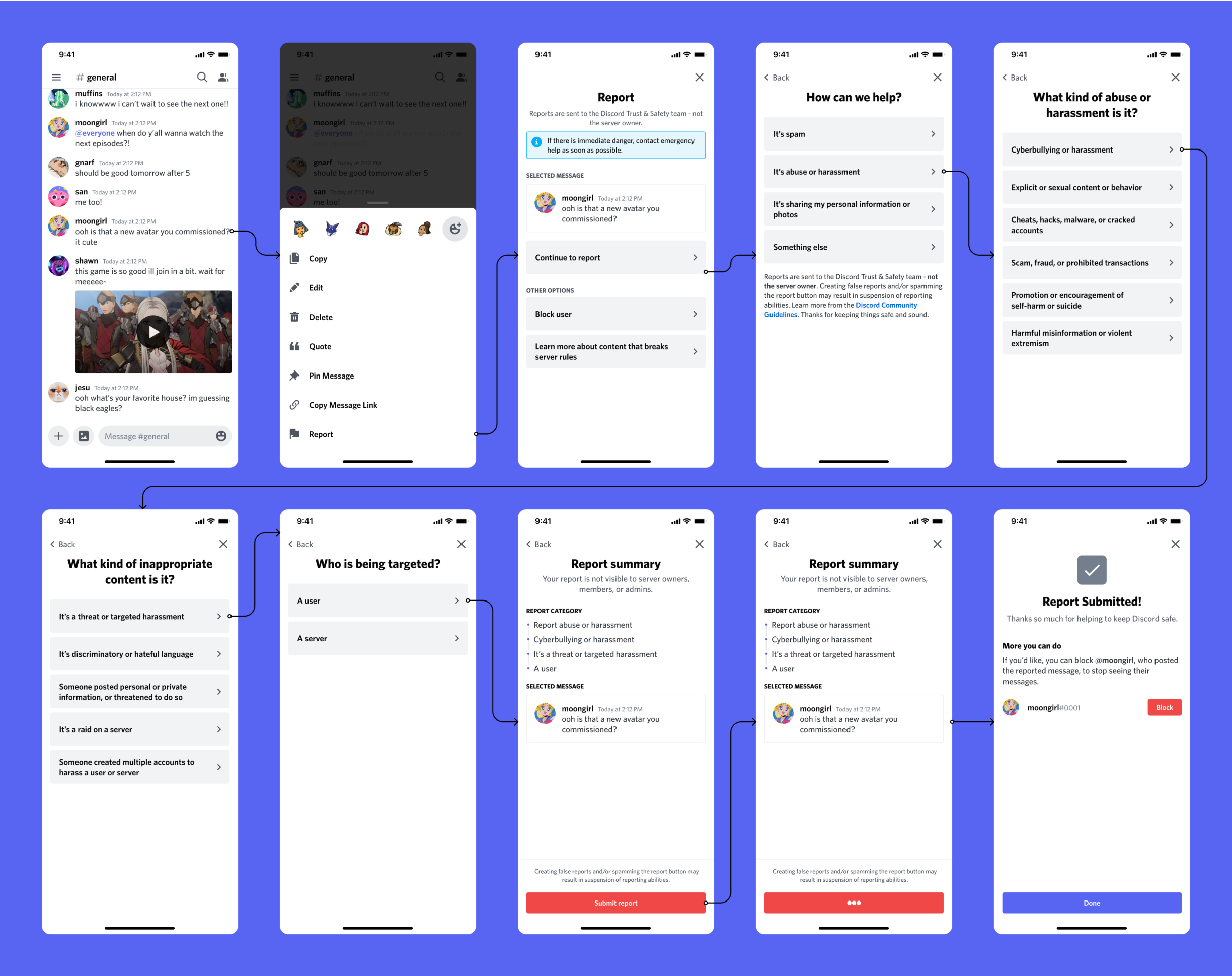

I led design on the updated in-app reporting feature, which helps people get problematic content to the place where action can be taken. Discord's previous reporting feature wasn't mobile-friendly: it relied on a lot of dropdowns and wasn't fully responsive. It also required users to manually enter hard-to-find information like message IDs.

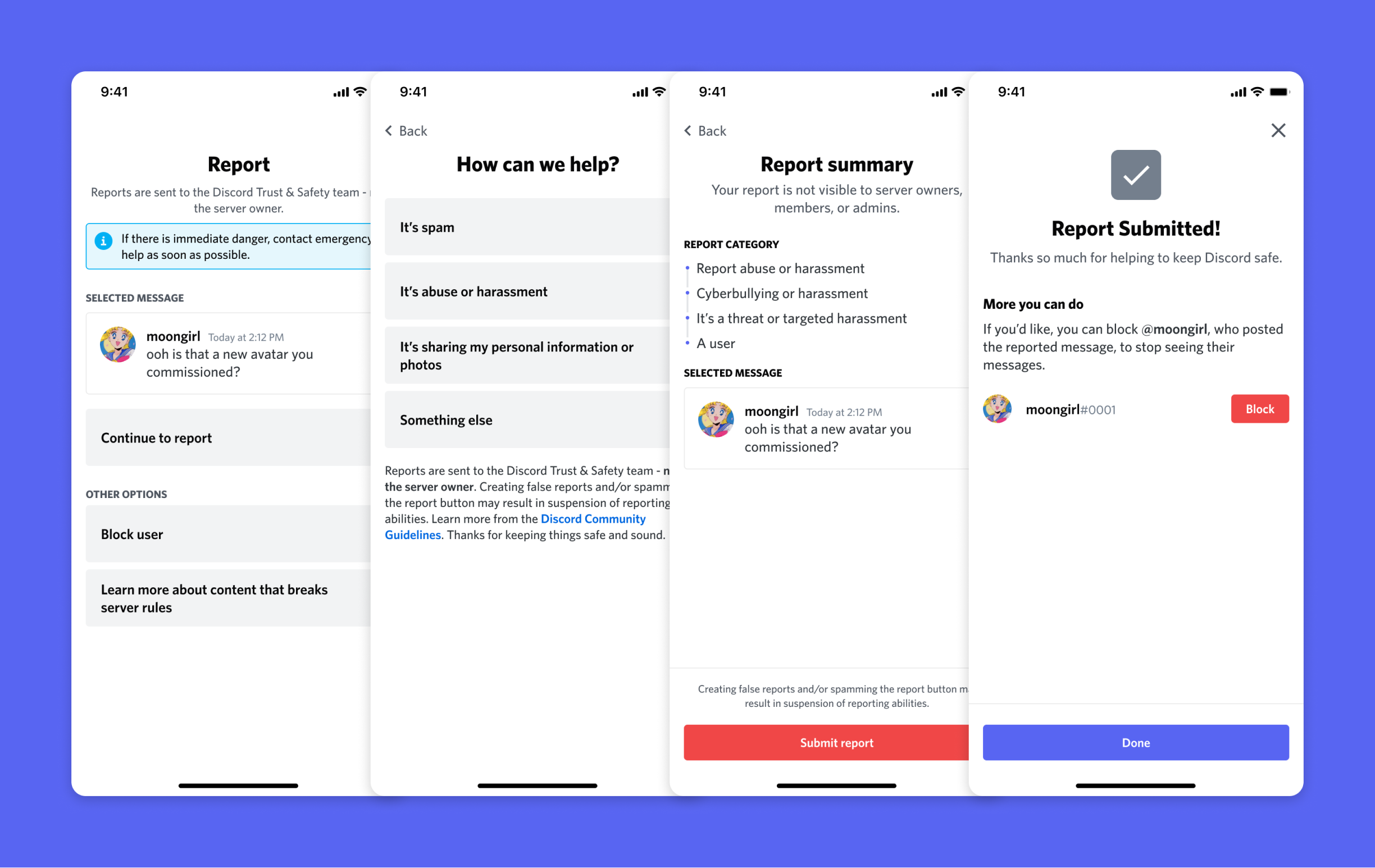

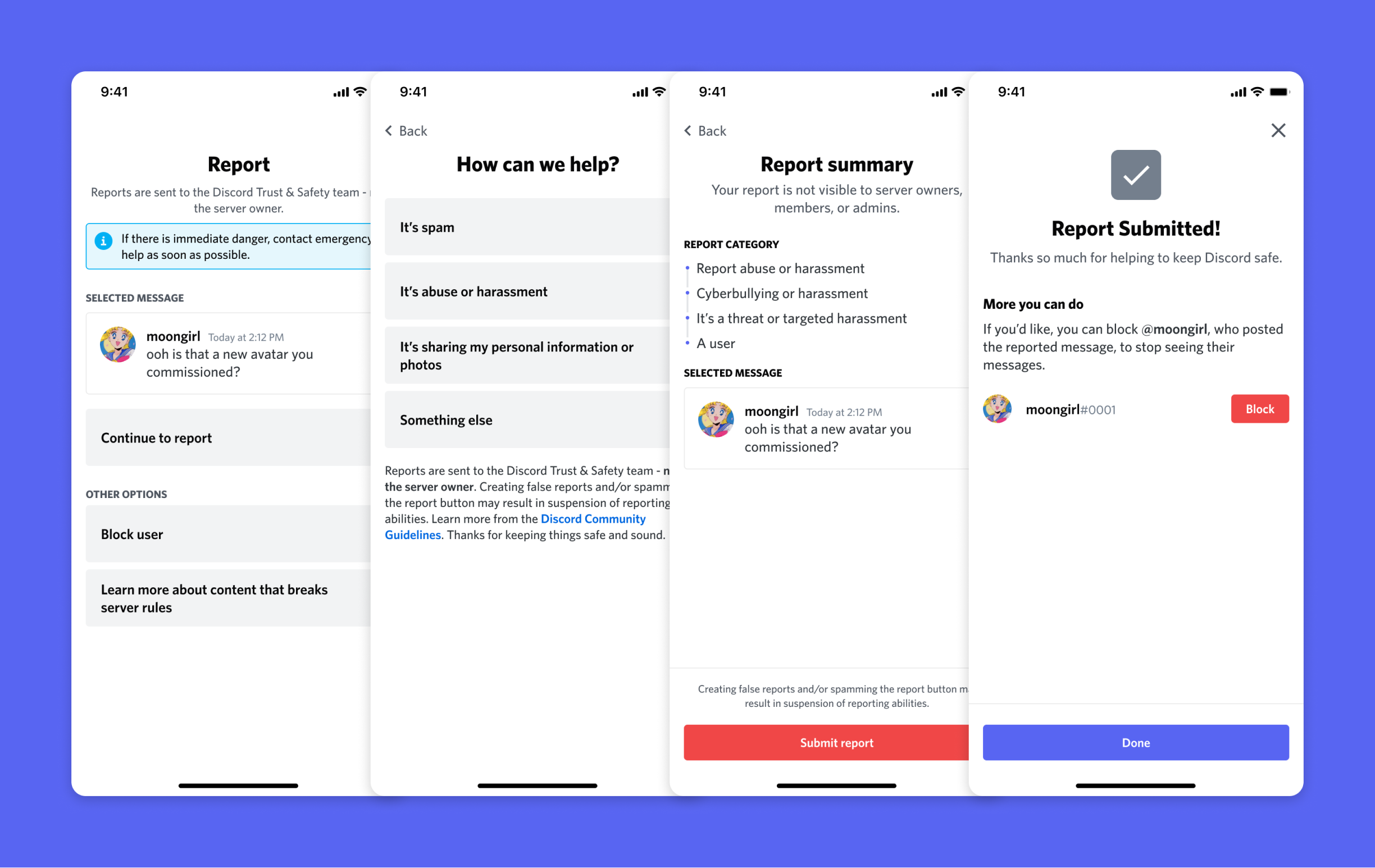

The full flow focused on breaking the information collection into manageable chunks. The components were designed to be modular and simple enough that if the Trust & Safety team needed to change the structure later on, reordering elements would be straightforward.

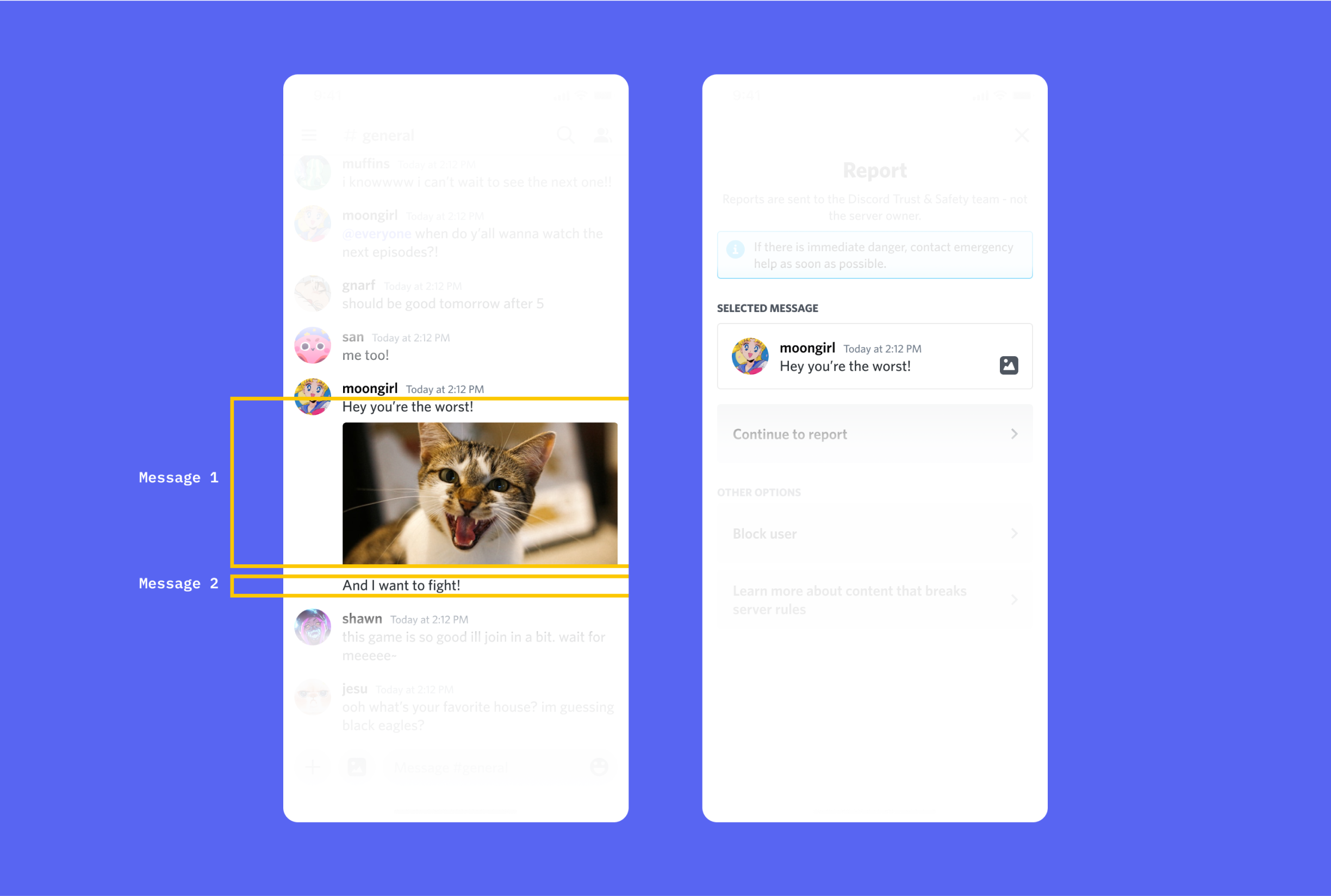

Reporting a message can be an emotionally turbulent experience, and I wanted to support the user wherever possible in the process. This culminated in a few UI details that I’m proud of. The first is a built-in message preview on the opening screen of the report, which gives the user confidence that they selected the correct item. This might not seem necessary if you haven’t used Discord much, but the density of chat and the way multiple messages get grouped together can make it easy to pick the wrong one. If the message contained media, which could well be disturbing, we obscured it in the preview so the user wasn’t confronted with it again.

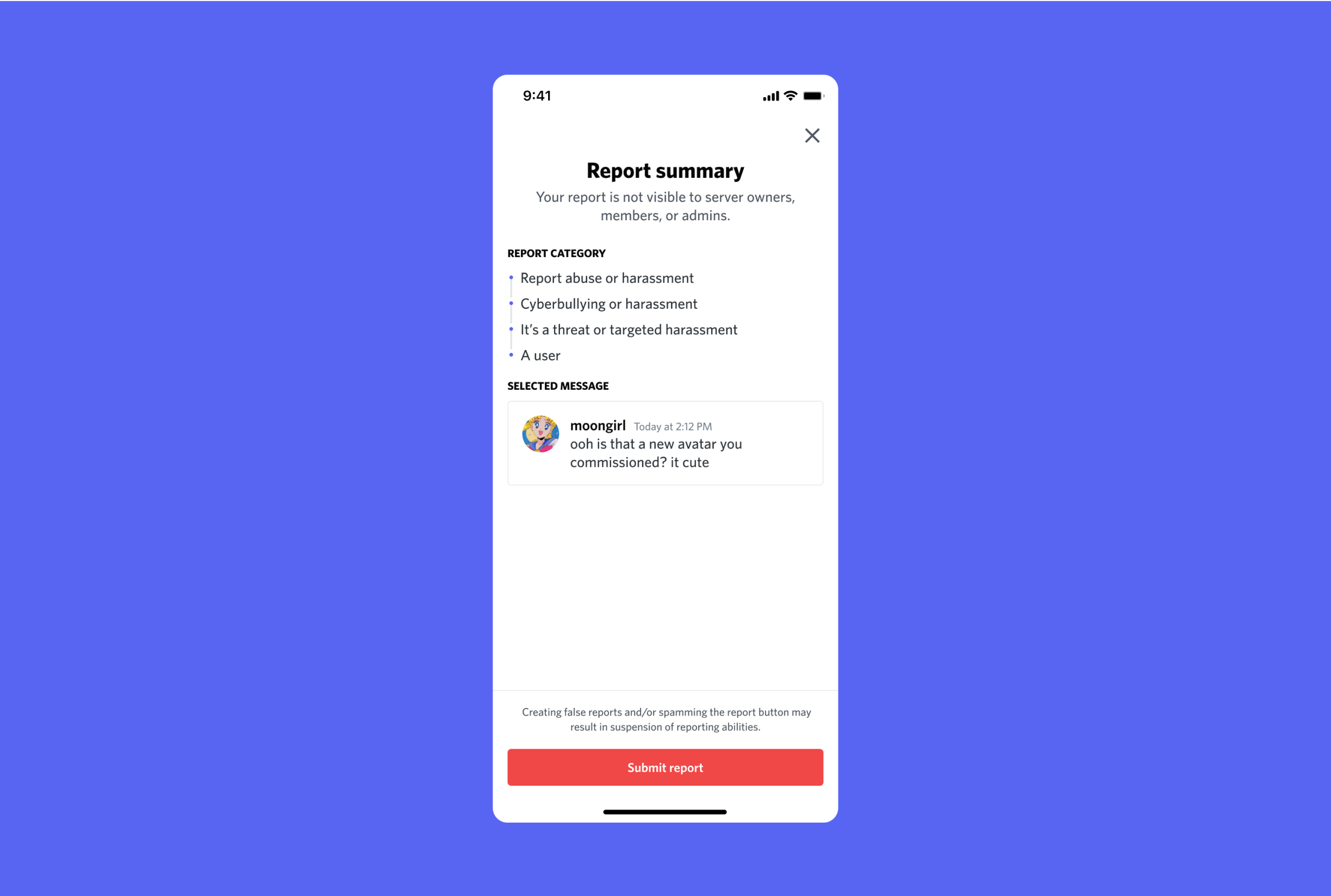

The review page also shipped with a custom breadcrumb-like component so the user could see exactly how their report was categorized. The selected message preview appeared once more on the final summary screen so the user could have a full overview before submitting.

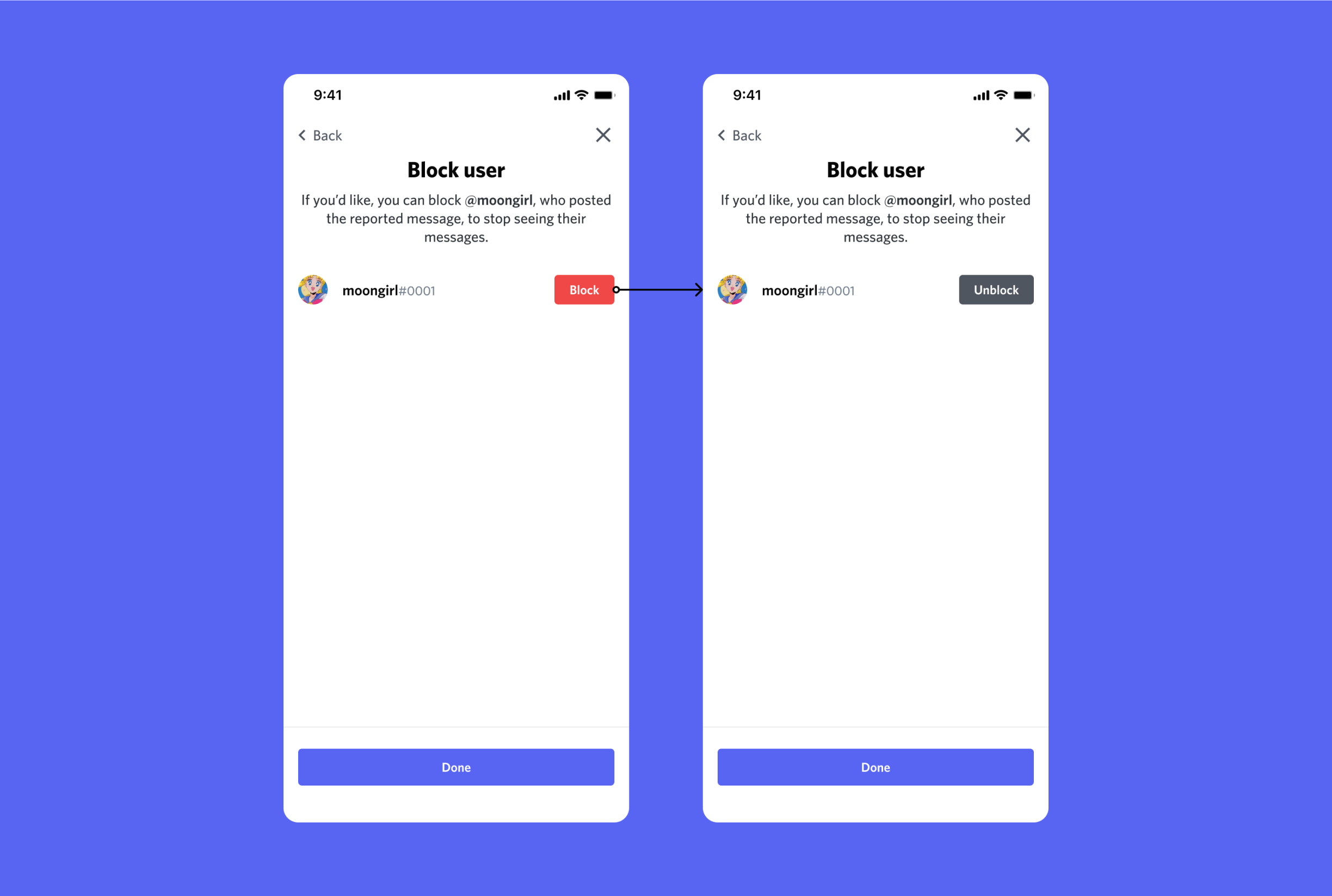

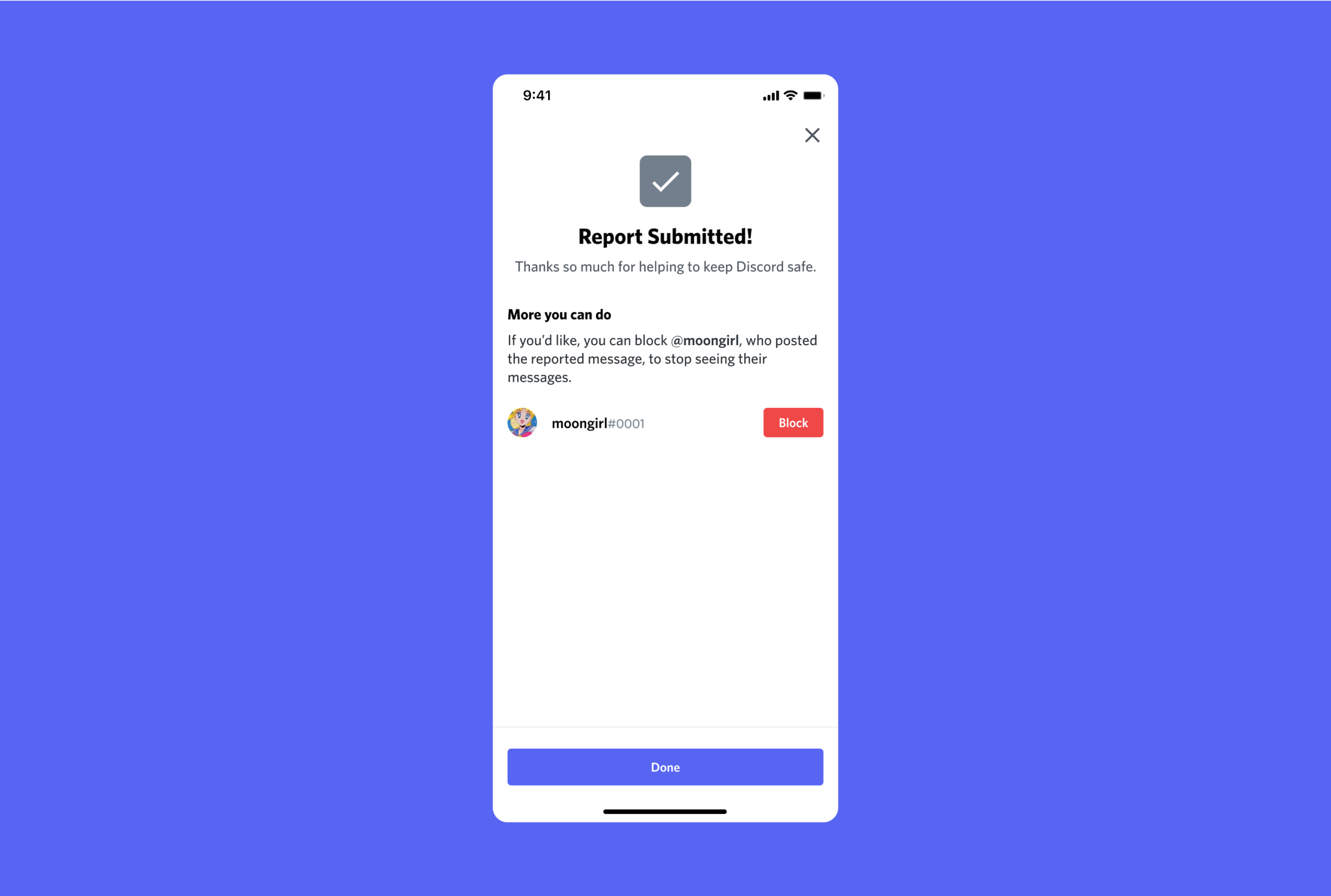

Another key way to support the user was giving them options in lieu of, or in addition to, the report itself. The first was the opportunity to block the author of the reported message, so they could return to the chat without confronting more toxic messages from the same person.

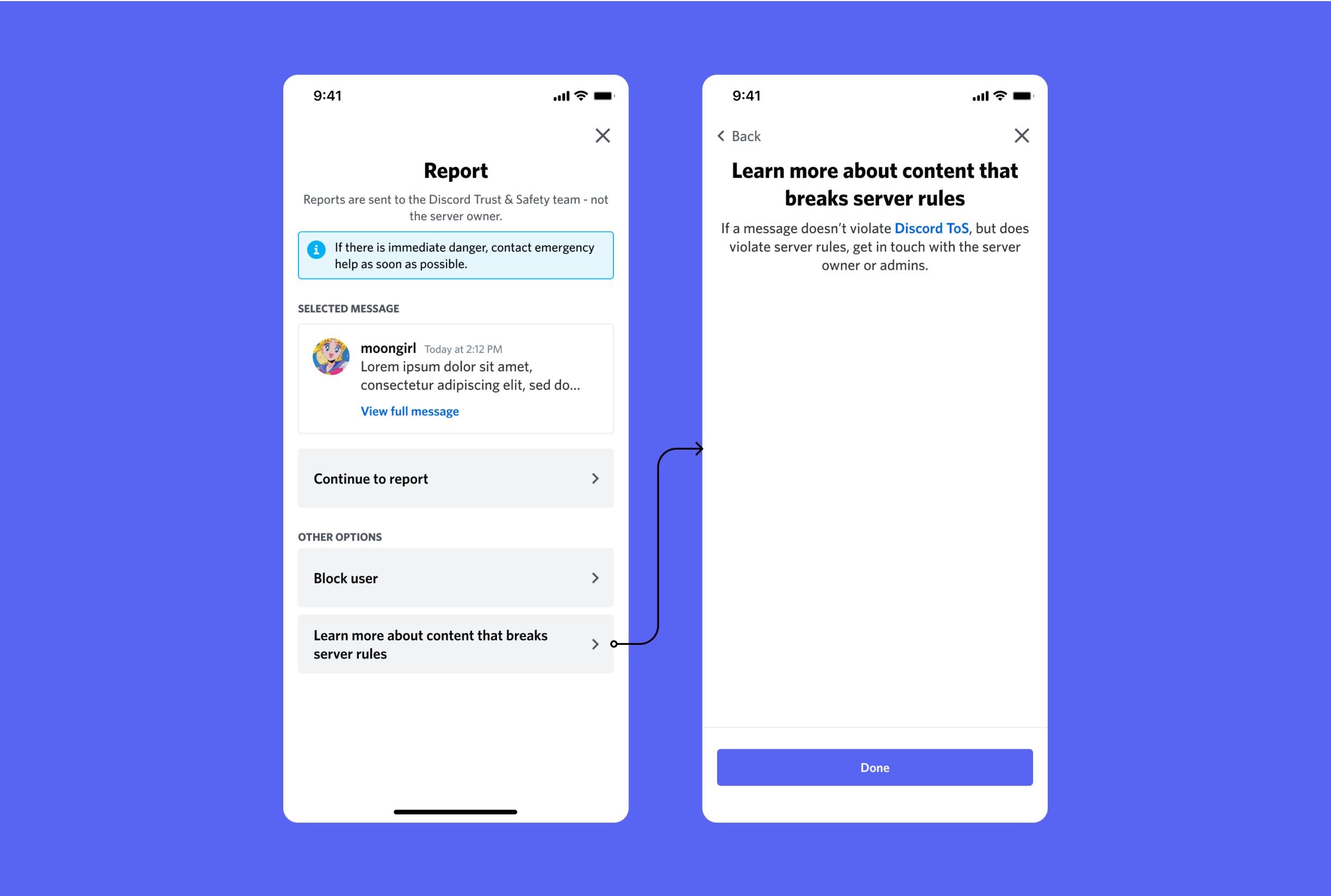

We also wanted to offer users alternatives to filing a report when appropriate. This would help lower the volume of reports reaching Trust & Safety so the team could keep up with the influx, and for the user it could be an educational moment.